The Human Side of AI in Healthcare: Why Trust Matters More Than Technology

Imagine this...

You’re not feeling well. You go online to book a doctor’s appointment and the first available slot is 59 days away. Nearly two months. Now imagine if an AI tool could look at your symptoms, run a check against thousands of similar cases, and immediately tell the doctor whether you need to be seen today—or next week. That’s not science fiction. It’s already possible. But here’s the catch: most people don’t fully trust it yet.

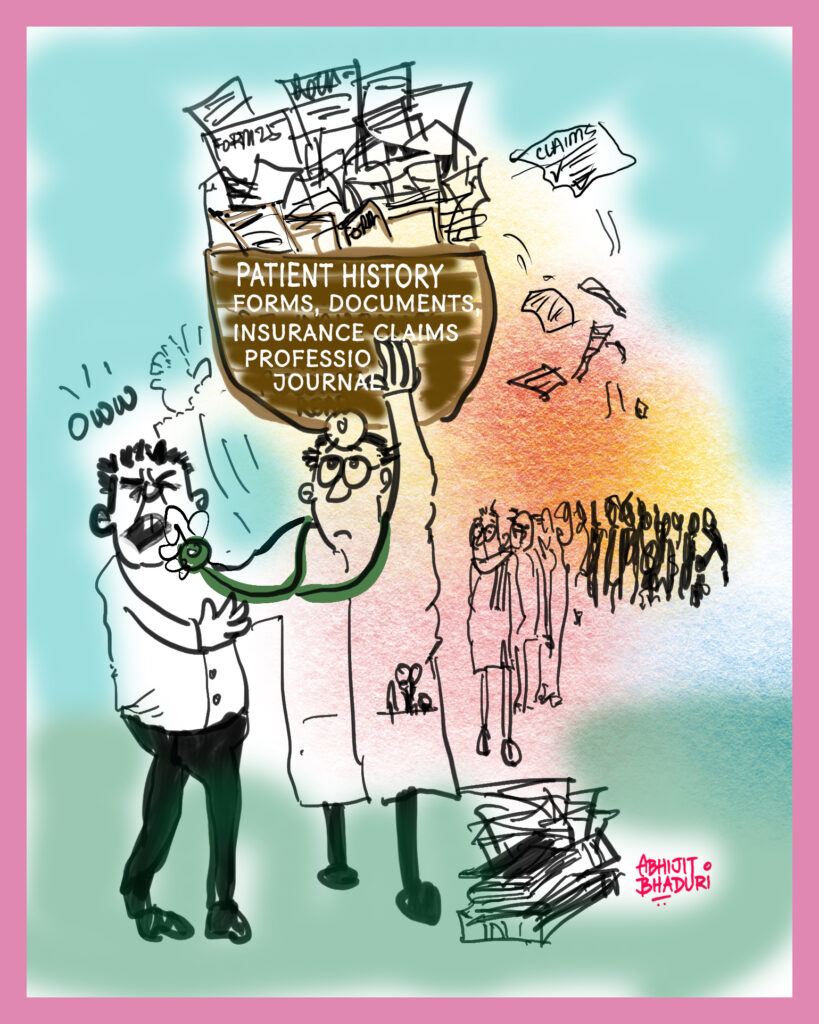

I recently asked some friends who are doctors, “What books are you reading?” Their answer: the amount of paperwork they have to do every single day is a leading cause of burnout. That is so sad.

A new report from Philips reveals something important: while doctors are increasingly optimistic about the role of AI in healthcare, patients—especially those over 45—are far less sure. It’s a trust gap. And it’s one we can and must fix.

Let’s explore why trust is missing, what it’s costing us, and how we can bridge that gap—not with more technology, but with more humanity.


We Are Living Longer, and That Strains the Healthcare System

Globally, healthcare systems are under enormous pressure. We’re living longer. Chronic illnesses like diabetes and heart disease are rising. At the same time, there’s a growing shortage of doctors and nurses. Many are leaving the profession altogether—burnt out, frustrated, and feeling like they no longer make a difference.

Imagine trying to row a boat across a lake, only to realize there’s a leak—and half your energy goes into bailing water just to stay afloat. That’s what it’s like for healthcare workers today. A big part of their time is spent not on treating patients, but on paperwork and pulling together data.

How AI Can Help (If We Let It)

AI has the potential to plug that leak. It can take care of administrative tasks, speed up diagnosis, and even predict which patients are at risk before symptoms appear. For doctors and nurses, this means more time for what really matters—caring for people.

But here’s where the story takes a turn. Even though the tools exist, people are hesitant. And understandably so. When your health is on the line, you want the comfort of a trained human, not a machine. Many older adults in particular say they feel uneasy about AI making decisions about their care.

This isn’t just about fear of technology. It’s about feeling seen, heard, and safe.

Why Trust Is So Hard to Build

Let’s look at this through the lens of human behavior.

From an anthropological view, we’ve always trusted people over machines. For most of history, healing happened in close-knit communities, guided by personal relationships. A village healer knew your family, your story, your struggles. That kind of trust doesn’t transfer easily to software.

Psychologically, we also have something called “ambiguity aversion”—we don’t like making decisions when we don’t understand the rules. And most people don’t know how AI works. If you don’t know how it reaches its conclusions, it’s hard to feel confident in its advice.

Socially, we’re also living in a moment of deep information fatigue. Between misinformation online and polarized media, people are increasingly skeptical of systems. That skepticism spills over into healthcare too.

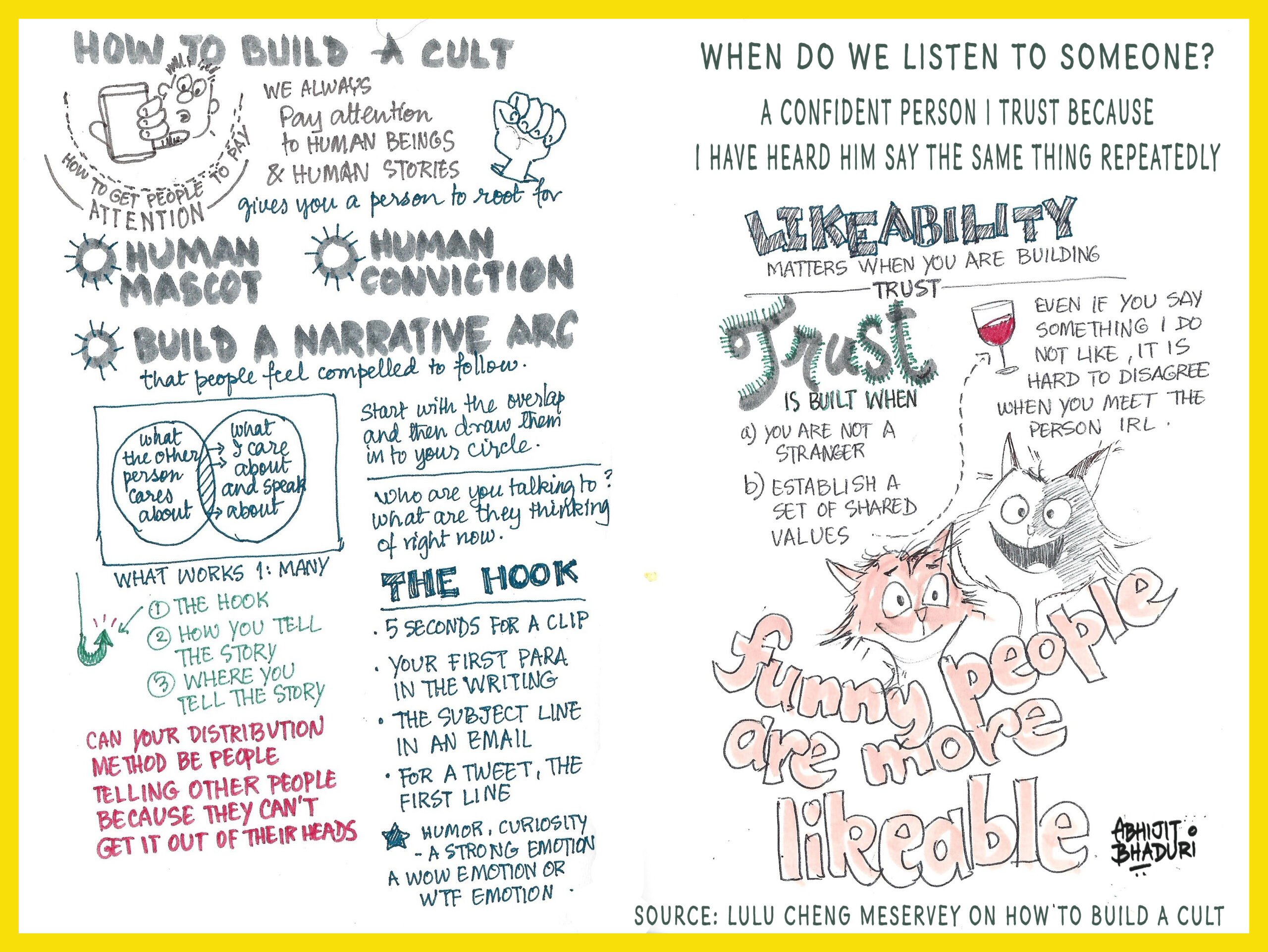

So it’s not surprising that patients say they trust AI far more when it’s explained by their doctor or nurse than when they hear about it from a news site or an app.

What’s the Cost of Waiting?

Delaying AI adoption has real consequences. Patients wait longer to get care. Doctors lose hours each week to admin work. Burnout rises. People drop out of the system.

And over time, something else happens—something harder to measure but just as damaging. People lose faith. In the system. In their ability to get better. In the promise of better health.

We can’t let that happen. And we don’t have to.

What We Can Do, Together

Let’s shift the conversation. From “AI will replace your doctor” to “AI can give your doctor more time with you.” Here’s how we start:

1. Keep People at the Center

Any AI tool in healthcare should be built with input from patients and professionals. Not just tested in labs. When people are part of the design, they trust the outcome more. And the technology works better because it fits into real lives, not just ideal workflows.

Think of it like designing a new kitchen. You wouldn’t let a robot place the stove in the hallway. You’d ask the cook what they need.

2. Be Transparent, Not Technical

Patients don’t need to know every algorithm. But they do need to understand what the tool is doing, why it’s being used, and how their doctor is still involved. Short videos, simple leaflets, or even a 30-second conversation can go a long way.

Instead of saying “AI is reviewing your scans,” say, “This tool compares your results with thousands of others so your doctor can spot anything unusual, faster.”

3. Let Doctors Be the Bridge

People trust their doctors. More than apps. More than institutions. That trust can be extended to AI—if the doctor believes in it and explains it. Training healthcare professionals to become translators—not just users—of AI is crucial.

Make it part of medical education. Make it part of daily workflow. Reward it.

4. Create Spaces to Experiment Safely

Hospitals should have the freedom to try AI tools in safe environments—like test kitchens for chefs—before rolling them out system-wide. This helps work out the kinks and shows staff and patients how things work in practice, not theory.

These “regulatory sandboxes” are already being used in sectors like finance and transportation. Healthcare should adopt them too.

5. Celebrate Stories of Impact

People remember stories, not stats. Share real-life examples of how AI caught a tumor early, reduced wait times, or gave a nurse back an hour a day. Use faces, not just numbers. This builds emotional connection, which builds trust.

This Is Bigger Than Healthcare

These lessons don’t just apply to hospitals. AI is making its way into schools, workplaces, even your living room. The same principles hold:

- Start with human needs.

- Build in trust, not just speed.

- Let people stay in the driver’s seat—even if AI is helping steer.

A Future Worth Believing In

In many ways, we’re standing at a crossroads. One path leads to faster, smarter care—but only if we trust the systems guiding us. The other path leaves us stuck with the same old problems, only worse.

Trust doesn’t come from technology alone. It comes from people. From empathy. From understanding. And from a shared belief that the future is something we can shape—not something that happens to us.

AI must help us become more human, not less.

What do you think? Leave me a comment.